What is the EU AI Act? Navigating Europe’s Rules for Artificial Intelligence

1/4/2025

Artificial Intelligence is changing the landscape of modern business and daily life, influencing everything from personalized medical solutions to autonomous vehicles and advanced data analytics. This technological revolution brings vast opportunities for growth and innovation worldwide, prompting industries to rethink their operations and competitive strategies. Nevertheless, alongside its immense potential, AI also introduces significant ethical, societal, and regulatory challenges that require careful governance.

In response to these opportunities and challenges, the European Union has introduced the AI Act, the first comprehensive legislation dedicated explicitly to regulating artificial intelligence.

Officially published on 12 July 2024 and entering into force on 1 August 2024, the AI Act establishes clear objectives for AI regulation.

According to Article 1, its primary purpose is to "improve the functioning of the internal market and promote the uptake of human-centric and trustworthy artificial intelligence (AI), while ensuring a high level of protection of health, safety, fundamental rights enshrined in the Charter, including democracy, the rule of law, and environmental protection, against the harmful effects of AI systems in the Union and supporting innovation."

This initiative underscores Europe’s ambition to lead globally in creating a trustworthy and human-centric AI ecosystem.

The state of AI in the EU

The importance of governing AI becomes even clearer when considering its widespread adoption. According to Eurostat (2024), AI is increasingly becoming a staple in European businesses, with diverse technologies, from text mining and natural language generation to image recognition and machine learning, being integrated across sectors. Yet, adoption rates vary significantly across Europe: Denmark, Sweden, and Belgium demonstrate high adoption rates, while Eastern European countries lag substantially.

The European AI market has seen significant growth in recent years, especially in the field of Generative AI, a technology that can create text, images, audio, and more. According to Statista (2025), the market size of Generative AI in Europe has expanded rapidly, reflecting its rising adoption across industries. In 2020, the European Generative AI market was valued at approximately $1.71 billion, and by 2030 it is projected to surpass $110 billion. This expansion highlights not only the increasing reliance on AI-driven solutions but also the urgency of clear regulatory frameworks to govern their use.
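To put the two Statista figures in perspective, the compound annual growth rate they imply can be worked out directly. The snippet below is a minimal sketch: the function name `cagr` is our own, and $110 billion is used as a lower bound, since the projection is only said to "surpass" that value.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in USD billions.
# 110.0 is a lower bound: the projection is "surpass $110 billion".
implied_rate = cagr(start_value=1.71, end_value=110.0, years=2030 - 2020)
print(f"Implied annual growth: {implied_rate:.1%}")
```

Under these assumptions, the market would need to grow by a little over 50% per year, every year, for a decade.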

Despite these advancements, Europe has historically lagged behind the United States and China in AI investment and adoption. European enterprises have been slower in integrating AI into business processes, partly due to concerns over data privacy, regulatory uncertainty, and fragmented markets.

According to the recent McKinsey report “Time to Place Our Bets: Europe’s AI Opportunity” (2024), Europe currently lags significantly behind other global regions, particularly North America, in terms of AI adoption, investments, and infrastructure. European businesses trail their US counterparts by approximately 45–70% in adopting AI technologies, with only 30% of companies in Europe utilizing AI in at least one business function compared to 40% in North America. Additionally, Western Europe falls behind the US by 61–71% in external spending on AI infrastructure, highlighting the continent’s investment gap.

Although Europe holds notable strengths in niche areas, such as semiconductor equipment for AI, where it commands 80–90% market share in extreme ultraviolet lithography, it remains underrepresented in many critical AI sectors. Europe’s relative disadvantage is further reflected in AI model creation, with just 25 of the world’s 101 prominent AI models originating from Europe, compared to 61 from the US. Furthermore, European AI startups are significantly underfunded; for instance, as of October 2024, France’s most prominent AI startup, Mistral AI, raised only $1 billion compared to the $11.3 billion raised by the US-based OpenAI.

Despite these hurdles, AI presents enormous economic opportunities for Europe, potentially adding $575.1 billion to the continent’s GDP by 2030 and boosting annual labor productivity growth by as much as 3%.

AI Act in depth

As the world’s first comprehensive AI regulatory framework, the AI Act establishes clear rules for AI development and deployment. It aims to strike a balance between incentivizing innovation and safeguarding fundamental rights, ensuring that AI systems are transparent, accountable, and aligned with European values.

As AI continues to shape the global economy, Europe’s approach to regulation will play a key role in determining how businesses, governments, and society leverage these technologies. The AI Act is not just about compliance; it is about building a foundation for trustworthy AI and setting a global precedent for responsible AI governance.

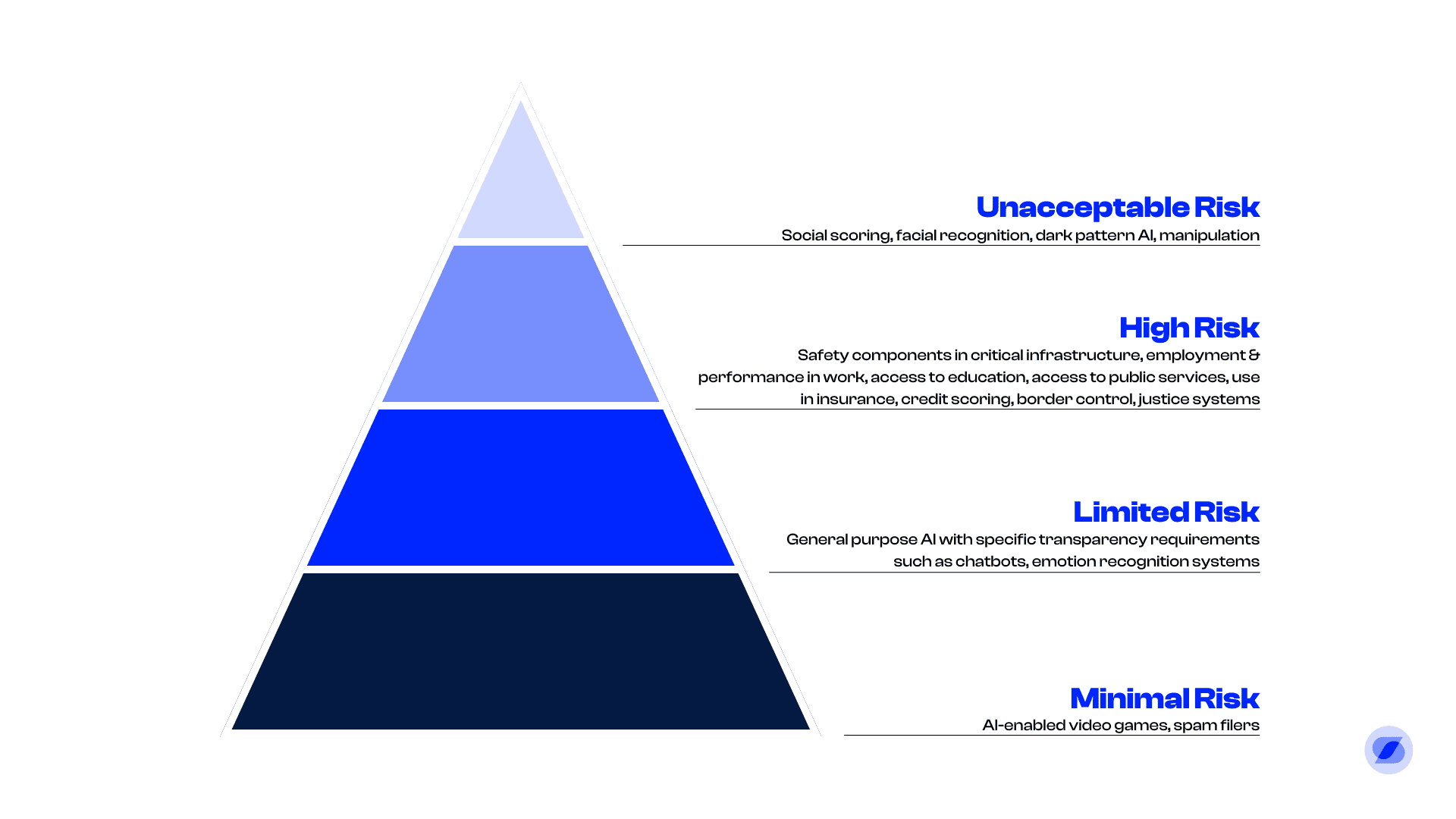

Recognizing AI’s vast potential alongside its considerable risks, the AI Act adopts a clear risk-based approach, categorizing AI systems into four distinct groups based on their potential harm to individuals and society:

1) Unacceptable risk

2) High-risk

3) Limited-risk

4) Minimal-risk systems

The 4 Levels of Risk

1) Unacceptable Risk

The AI Act explicitly defines the category of “Unacceptable-Risk AI systems” as those technologies considered inherently dangerous due to their significant potential to compromise individual rights, autonomy, and societal values. Chapter 2 of the Act clearly outlines specific types of AI systems that fall into this category and are therefore strictly prohibited.

Specifically, the Act prohibits:

• AI systems employing subliminal, deceptive, or manipulative techniques designed to distort an individual’s ability to make informed decisions.

• AI technologies exploiting the vulnerabilities of specific groups to distort their behaviors in ways that lead to significant harm.

• Social scoring systems.

• AI systems that predict the likelihood of a person committing a criminal offence based solely on profiling or on an assessment of personality traits.

• AI systems that engage in untargeted scraping of facial images from the internet or CCTV footage to build or expand facial recognition databases.

• Biometric categorization systems that infer sensitive personal attributes, such as race, political opinions, religious beliefs, or sexual orientation.

• Emotion recognition systems deployed in sensitive settings such as workplaces and educational institutions.

2) High-Risk

High-Risk AI systems, as detailed in Chapter 3 of the AI Act, encompass technologies with significant implications for public safety and fundamental rights. These AI systems, due to their sensitive nature and potential widespread impacts, require stringent regulatory oversight. They include applications in critical fields such as healthcare (e.g., medical devices), infrastructure management, education (e.g., student assessment systems), employment (e.g., recruitment tools), law enforcement, migration, border control, judicial processes, democratic elections, and financial services (e.g., insurance and banking).

To ensure safe and ethical use, the AI Act imposes detailed obligations on providers of high-risk AI systems. High-risk AI systems fall into two subcategories:

• AI systems incorporated into products already regulated by EU product safety legislation, requiring third-party conformity assessments.

• Standalone high-risk AI systems used in specified critical sectors, which also undergo thorough compliance processes.

3) Limited-Risk AI Systems

The EU AI Act clearly defines the Limited-Risk AI systems category in Chapter 4, outlining systems that, while not inherently dangerous enough to be considered high-risk, still require specific transparency obligations due to their potential societal impact. Typical examples include generative AI applications like ChatGPT, which generate or significantly modify content. Such AI systems must adhere to clear regulatory obligations to ensure ethical and transparent use.

Limited-risk AI system providers must:

• Clearly disclose when content is AI-generated or AI-modified

• Prevent the generation of illegal or harmful content

• Provide transparency about training datasets

4) Minimal-Risk AI Systems

Minimal-risk AI systems are the residual category under the EU AI Act: applications with negligible or very limited potential to cause harm or adverse impacts on individuals and society, which fall outside the prohibited, high-risk, and limited-risk categories. The Act does not devote a chapter of its own to them. These are widely deployed, common AI technologies that we regularly encounter in everyday scenarios, such as spam filters, AI-enabled video games, and inventory management systems.

Given their low impact, minimal-risk AI systems face very few regulatory obligations under the AI Act. Specifically:

• No Mandatory Compliance Requirements

• Voluntary Codes of Conduct

For business owners and executives using minimal-risk AI systems sourced from third-party vendors, the practical implications are straightforward:

• Responsible Sourcing and Use

• Risk Assessment

A significant general requirement embedded across all categories, including minimal-risk AI, is the obligation for AI providers and deployers to ensure adequate AI literacy among their staff. This ensures informed oversight and contributes significantly to responsible and ethical AI practices across the organization.
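The four tiers described above can be pictured as a simple lookup from a use case to a tier and its consequence. The sketch below is illustrative only: the names `RiskTier`, `CONSEQUENCE`, `EXAMPLES`, and `describe` are hypothetical, the use cases are the examples given in this article, and a real classification requires a case-by-case legal assessment rather than a table.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# One-line summary of what each tier entails, paraphrasing the article.
CONSEQUENCE = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "stringent obligations and conformity assessment",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "no mandatory requirements; voluntary codes of conduct",
}

# Hypothetical, lowercase use-case labels mapped to the tier the article assigns.
EXAMPLES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "recruitment tool": RiskTier.HIGH,
    "student assessment system": RiskTier.HIGH,
    "generative ai application": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "inventory management system": RiskTier.MINIMAL,
}


def describe(use_case: str) -> str:
    """Return a short description of the tier for a known example use case."""
    tier = EXAMPLES.get(use_case.strip().lower())
    if tier is None:
        return f"{use_case}: not in this illustrative table; needs a proper assessment"
    return f"{use_case}: {tier.value} risk ({CONSEQUENCE[tier]})"


print(describe("Recruitment tool"))
print(describe("Spam filter"))
```

Note that the AI literacy requirement mentioned above applies regardless of which tier a system falls into, so it is deliberately not modeled as a per-tier consequence here.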

Soource and Compliance with the AI Act

At Soource, we use artificial intelligence as an operational tool designed to support internal activities and enhance the efficiency of our users. Our technology leverages general-purpose AI models that enable us to offer specific functionalities such as semantic search, advanced data analysis, email drafting support, and rapid information summarization.

A key aspect of our approach is that no decision-making or communication activity is automated without the direct and active supervision of our users. Every AI-generated output is always subject to final human review and validation before being used or shared externally. At all times, our users retain full control over operational decisions and final interactions with clients or external stakeholders.

Our commitment to constant human oversight is a central element in mitigating potential risks and ensuring the responsible use of AI technologies.
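The general pattern of keeping a human in the loop can be sketched as a gate that refuses to release an AI-generated draft until a person has signed off on it. This is a generic illustration, not a description of Soource's actual implementation; `Draft`, `approve`, and `release` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Draft:
    """An AI-generated draft awaiting human review."""
    text: str
    approved_by: Optional[str] = None  # reviewer's name once approved

    def approve(self, reviewer: str, edited_text: Optional[str] = None) -> None:
        """Record human approval, optionally replacing the text with an edited version."""
        if edited_text is not None:
            self.text = edited_text
        self.approved_by = reviewer


def release(draft: Draft) -> str:
    """Return the text for external use, but only if a human has approved it."""
    if draft.approved_by is None:
        raise PermissionError("AI-generated draft has not been reviewed by a human")
    return draft.text


draft = Draft(text="Dear supplier, please find our request attached.")
try:
    release(draft)  # blocked: nobody has reviewed the draft yet
except PermissionError as err:
    print(f"Blocked: {err}")

draft.approve(reviewer="buyer@example.com")
print(release(draft))  # allowed after human approval
```

The design choice worth noting is that the check lives in `release` itself, so there is no code path that sends a draft externally without an explicit approval step.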

To learn more about our AI Act compliance strategy, feel free to contact us. We’d be happy to walk you through our approach to ethical and responsible use of artificial intelligence.

FAQ

We have collected the most frequently asked questions here, but don't hesitate to contact us if you have any questions or want to know more about Soource.

Is Soource compatible with my ERP (Enterprise Resource Planning) system or procurement suite?

Yes, Soource is compatible with major ERP and procurement software like SAP Ariba, Jaggaer and other enterprise systems. You can integrate it for bidirectional data exchange or use it as a plug & play solution, without the need for integration.

Is my data on Soource protected and secure?

Absolutely. All corporate, personal and supplier data remains your property and is processed according to GDPR and European regulations. Generic data is anonymized before it is used to improve the performance of the platform and of the Soource community.

Where does supplier data on Soource come from?

Soource's database combines:

- Certified and public sources

- Data collected from intelligent web crawling

- Information automatically extracted from corporate emails

Today Soource offers the largest database of Italian companies with direct email contact.

Can I attach files to RFI, RFQ or RFP on Soource?

Yes, you can attach documents in any format to your requests (RFI - Request for Information, RFQ - Request for Quotation or RFP - Request for Proposal). In particular, for RFIs, it is recommended to include a technical specification sheet of the product to get more detailed responses from suppliers.

Can I send follow-ups to suppliers with Soource?

Yes. With Soource you can filter suppliers who haven't responded and send a mass follow-up in one click. This can help you double the response rate, to as much as 80%, improving RFI, RFP and RFQ management.

Is Soource compliant with the European AI Act?

Yes. Soource's artificial intelligence is compliant with the European Union's new AI Act, falling into the minimal-risk category, as it only supports internal activities and does not automate external-facing processes or critical decisions.

Why use Soource for RFI and RFP?

With Soource you can:

- Create RFI (Request for Information) and RFP (Request for Proposal) in just a few clicks

- Send them automatically to selected suppliers

- Analyze responses with artificial intelligence

- Extract and compare certifications, availability, technical capabilities

You save time and identify the best suppliers more quickly.

Can I also use Soource for RFQ?

Yes. Soource also supports RFQ (Request for Quotation) to request price quotations from suppliers. The platform automates:

- RFQ sending

- Response reception and reading

- Comparison of prices and conditions